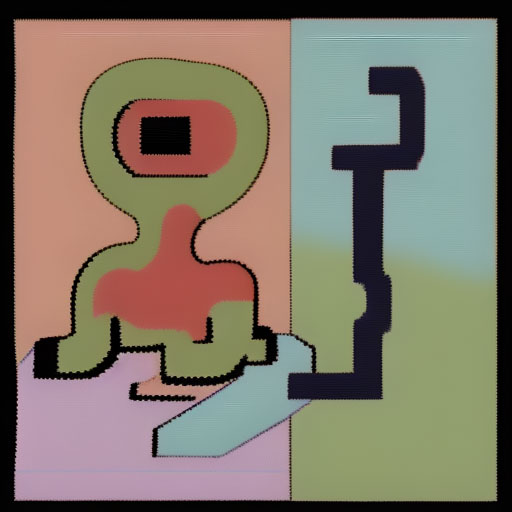

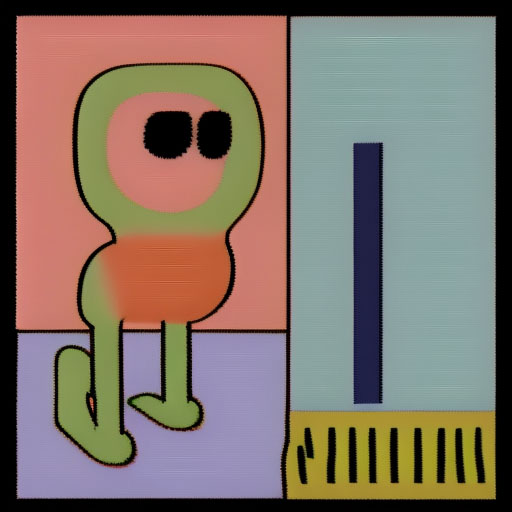

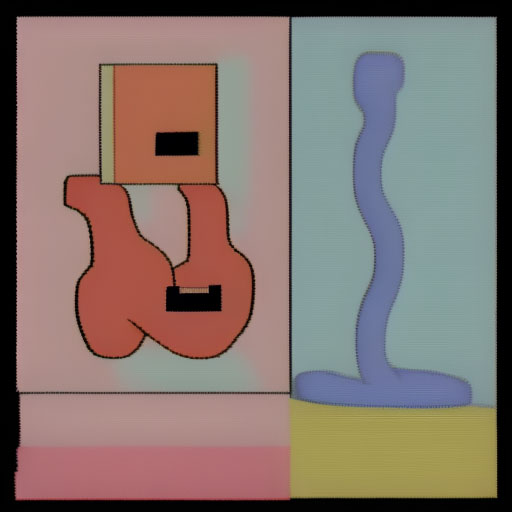

The ‘same’ image across different seeds

A seed determines the initial block of noise in which the model ‘finds’ the image that it generates. The generation process is actually a denoising process: the model has been trained to remove noise from noisy images, so it starts with pure noise and progressively removes it. The ‘guidance’ that you provide (typically in the form of a text prompt) introduces a bias in the generation process that steers it towards the image in the model’s latent space that ‘means’ the same thing as the text prompt.

There is no meaningful signal in the noise that the process starts from, but each of the 4294967296 (2^32) seeds creates a distinct (and meaningless) scattering of noise. Each of these distinct arrangements of noise provides a distinct starting place for the model’s algorithmic process to unfold. This is why each seed leads to a different (but similar) output image.
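To make the link between a seed and its noise concrete, here is a minimal sketch in Python with NumPy. It is not how any particular image generator is implemented: the helper name `initial_noise` and the noise shape `(4, 64, 64)` are assumptions chosen for illustration, and real tools typically use their own random number generator. The only point it demonstrates is that a seed deterministically fixes the starting noise.

```python
import numpy as np

NUM_SEEDS = 2**32  # 4294967296, the number of seeds mentioned above


def initial_noise(seed, shape=(4, 64, 64)):
    """Hypothetical helper: return the starting noise for a given seed.

    The shape is an illustrative assumption, not taken from a specific model.
    """
    if not 0 <= seed < NUM_SEEDS:
        raise ValueError("seed must be between 0 and 2**32 - 1")
    rng = np.random.default_rng(seed)   # the seed fixes the generator's state
    return rng.standard_normal(shape)   # Gaussian noise with no signal in it


a = initial_noise(42)
b = initial_noise(42)
c = initial_noise(43)

print(np.array_equal(a, b))  # True: same seed, identical noise
print(np.array_equal(a, c))  # False: different seed, different noise
```

Because the same seed always reproduces the same noise, reusing a seed with identical settings gives the denoising process the same starting point, while changing only the seed gives it a different one.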

In the set of images below, you can see how much variation there is in the ‘same’ output image depending on which seed its denoising process started from. All images were made using identical settings, except for the seed.